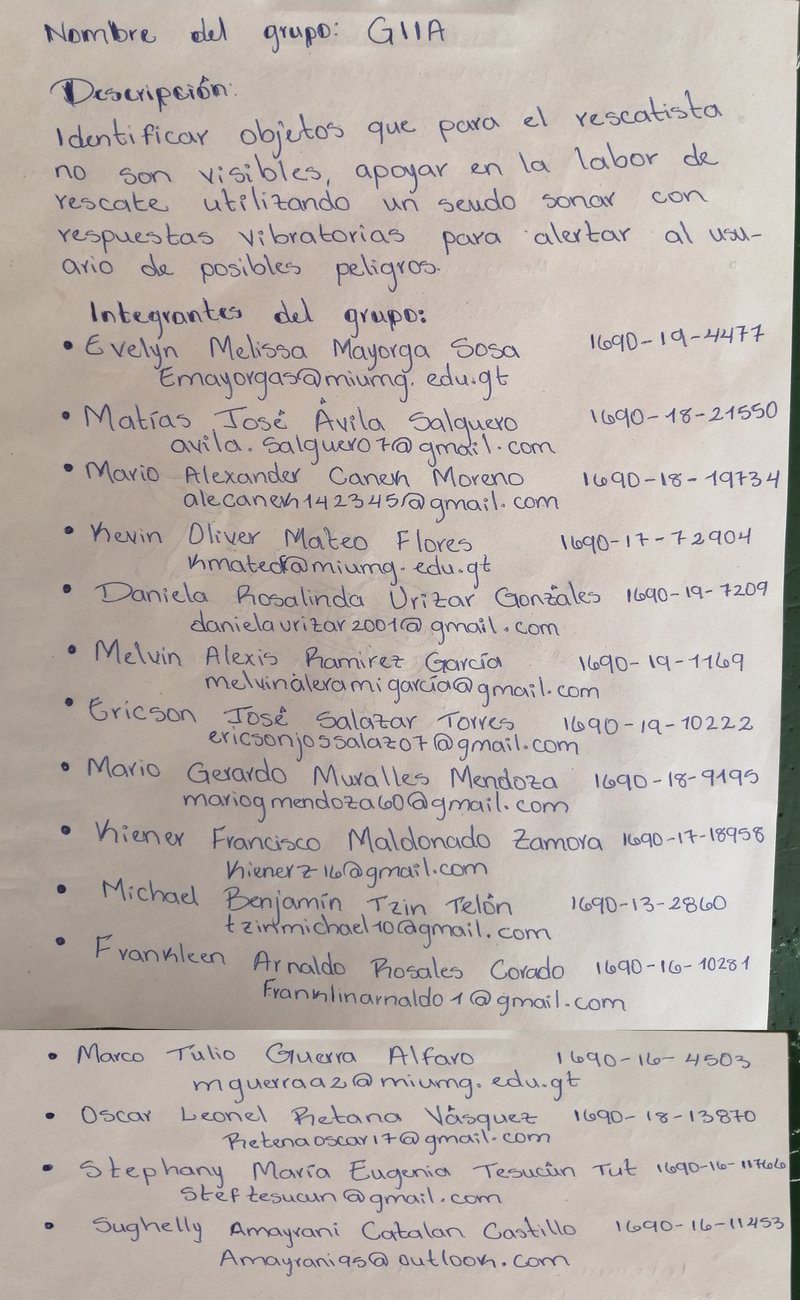

Team Updates

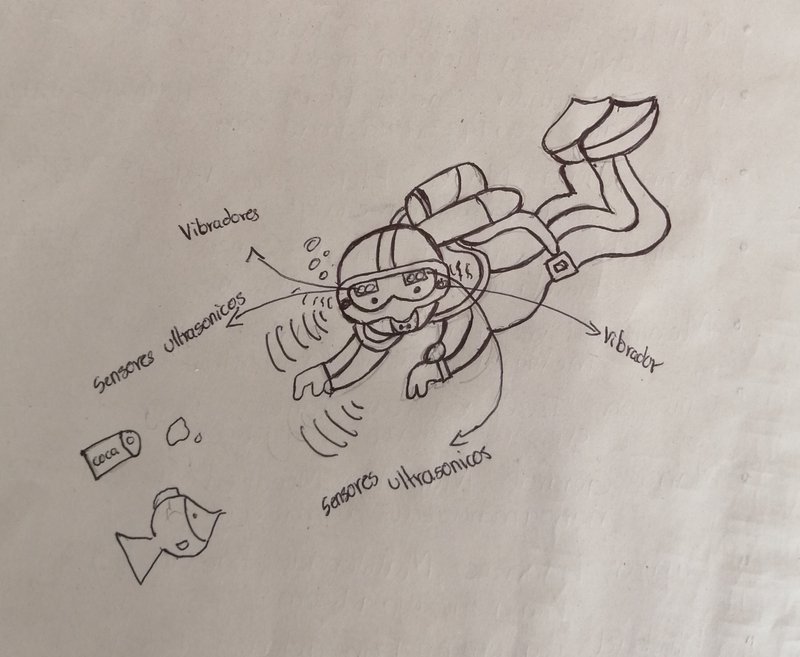

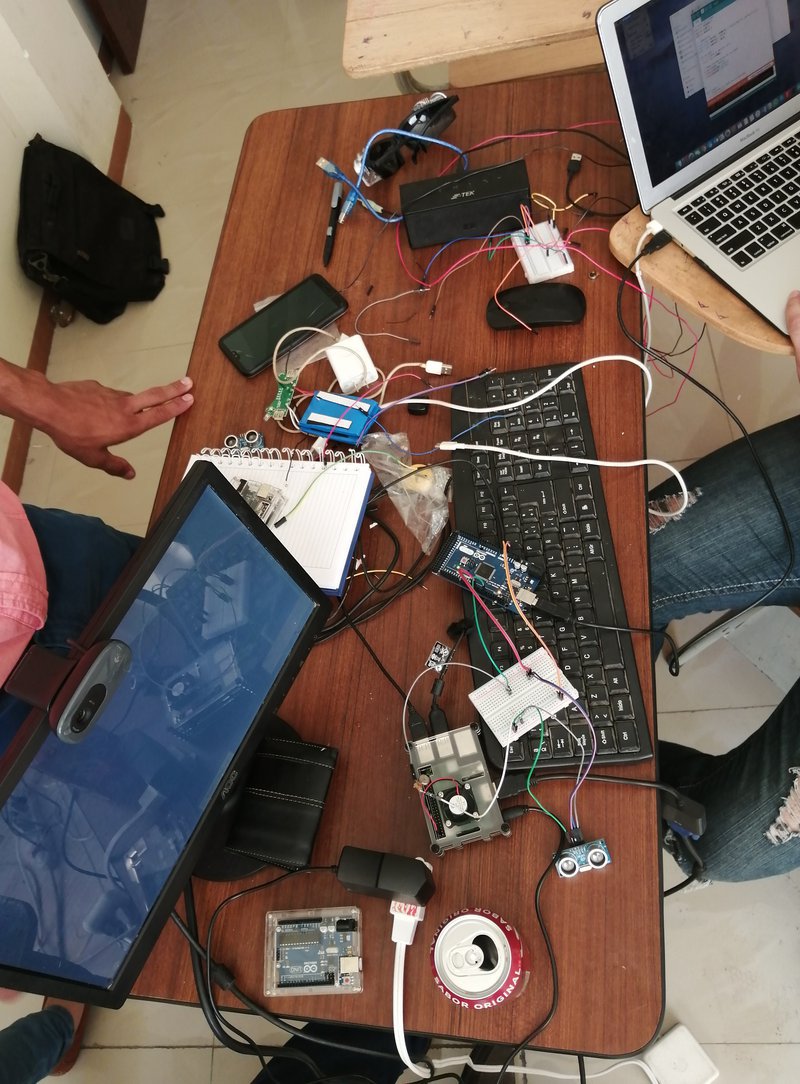

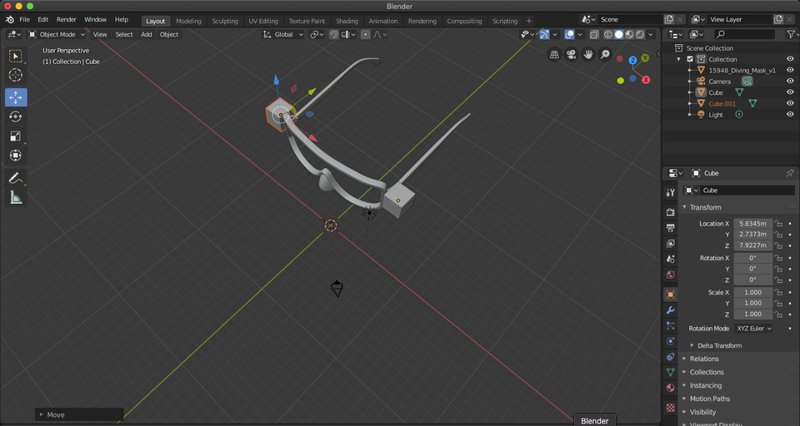

Our project consists of diving goggles designed to monitor the seabed.

The goggles incorporate sensors that track the rise in water level, combining the sea-level data published by NASA with our own current seabed measurements.

Data consulted:

https://vesl.jpl.nasa.gov/sea-level/slr-gravity/

https://climate.nasa.gov/vital-signs/sea-level/

We also collect information and digital images in our own database.

We also use local weather reports to anticipate possible storms and to see how they contribute to significant rises in sea level.
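The comparison between local measurements and a reference sea-level trend can be sketched as follows. This is only an illustration under stated assumptions: the `DepthReading` record, the function names, and the idea of fitting a straight line to the readings are hypothetical and not taken from the project. The reference rate is passed in by the caller (for example, a value read from the NASA pages listed above); no value is built in.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class DepthReading:
    """One local water-depth measurement (hypothetical record layout)."""
    year: float      # decimal year of the measurement
    depth_cm: float  # water depth above the seabed, in cm


def local_trend_cm_per_year(readings):
    """Least-squares slope of depth against time, in cm per year."""
    if len(readings) < 2:
        raise ValueError("need at least two readings to fit a trend")
    years = [r.year for r in readings]
    depths = [r.depth_cm for r in readings]
    y_bar, d_bar = mean(years), mean(depths)
    denom = sum((y - y_bar) ** 2 for y in years)
    if denom == 0:
        raise ValueError("readings must cover more than one point in time")
    numer = sum((y - y_bar) * (d - d_bar) for y, d in zip(years, depths))
    return numer / denom


def exceeds_reference(readings, reference_cm_per_year, margin_cm_per_year=0.0):
    """True when the local trend is above the reference rate plus a margin."""
    local = local_trend_cm_per_year(readings)
    return local > reference_cm_per_year + margin_cm_per_year
```

A caller would collect `DepthReading` values over time and pass the reference rate it trusts. The margin parameter is there so that small sensor noise does not trigger an alert on its own.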

- It keeps the population informed through social networks, which a large number of people use.

- It incorporates our own collected data and images, which any interested person can consult.

WHY LIMIT TO JUST ONE PROBLEM?

GIIA can be adapted to other situations, such as supporting rescue teams: it has a camera and facial recognition, and the results are stored in a separate, specialized database. It also notifies the rescuer of danger.
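The storage step mentioned above could look like the following minimal sketch. It assumes SQLite as the database and a hypothetical `detections` table; the project's actual database and schema are not described in this document. It stores only the bounding boxes of detected faces, in the `(x, y, w, h)` form that OpenCV's `detectMultiScale` returns, and does not identify people.

```python
import sqlite3
import time


def open_store(path=":memory:"):
    """Open (or create) the detection store. The table layout is an assumption."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS detections ("
        " id INTEGER PRIMARY KEY AUTOINCREMENT,"
        " captured_at REAL NOT NULL,"
        " x INTEGER NOT NULL, y INTEGER NOT NULL,"
        " w INTEGER NOT NULL, h INTEGER NOT NULL)"
    )
    return conn


def save_detections(conn, faces, captured_at=None):
    """Insert one row per face bounding box and return the number of rows saved."""
    ts = time.time() if captured_at is None else captured_at
    # int() converts NumPy integers (as returned by OpenCV) to plain Python ints
    rows = [(ts, int(x), int(y), int(w), int(h)) for (x, y, w, h) in faces]
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT INTO detections (captured_at, x, y, w, h) VALUES (?, ?, ?, ?, ?)",
            rows,
        )
    return len(rows)
```

In a capture loop, `save_detections(conn, faces)` would be called once per frame with the list of detected faces. Passing a file path to `open_store` instead of the default keeps the data between runs.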

We also adapt the goggles for blind people: soft vibration motors warn the wearer of obstacles.
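One way to turn an obstacle distance into a vibration strength is a simple linear ramp, sketched below. The function name and the linear mapping are assumptions made for illustration; the default limits reuse the 175 cm and 50 cm thresholds from the Arduino sketch in this document, which drives a buzzer rather than a motor.

```python
def vibration_duty(distance_cm, max_range_cm=175.0, min_range_cm=50.0):
    """Map an obstacle distance to a motor duty cycle between 0.0 and 1.0.

    Beyond max_range_cm the motor is off; at or below min_range_cm it runs
    at full strength; in between, the strength grows linearly as the
    obstacle gets closer.
    """
    if max_range_cm <= min_range_cm:
        raise ValueError("max_range_cm must be greater than min_range_cm")
    if distance_cm >= max_range_cm:
        return 0.0
    if distance_cm <= min_range_cm:
        return 1.0
    return (max_range_cm - distance_cm) / (max_range_cm - min_range_cm)
```

The returned value would typically be scaled to whatever range the motor driver expects, for example 0 to 255 for an 8-bit PWM output.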

```python
# import the necessary packages
from picamera.array import PiRGBArray
from picamera import PiCamera
import time
import cv2

# initialize the camera and grab a reference to the raw camera capture
camera = PiCamera()
camera.resolution = (320, 240)
camera.framerate = 12.0
rawCapture = PiRGBArray(camera, size=(320, 240))

# define the codec and create the VideoWriter object
fourcc = cv2.VideoWriter_fourcc(*'XVID')
out = cv2.VideoWriter('output.avi', fourcc, 8, (320, 240))

# load the cascade file for detecting faces once, before the capture loop
face_cascade = cv2.CascadeClassifier('/home/pi/haarcascade_frontalface_default.xml')
#face_cascade = cv2.CascadeClassifier('/home/pi/project/faces.xml')

# allow the camera to warm up
time.sleep(0.1)

# capture frames from the camera
for frame in camera.capture_continuous(rawCapture, format="bgr", use_video_port=True):
    # grab the raw NumPy array representing the image
    image = frame.array

    # convert to grayscale
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

    # look for faces in the image using the loaded cascade file
    faces = face_cascade.detectMultiScale(gray, 1.1, 4)

    # draw a rectangle around every found face
    for (x, y, w, h) in faces:
        cv2.rectangle(image, (x, y), (x + w, y + h), (255, 255, 0), 2)

    # write the frame to the video file and show it
    out.write(image)
    cv2.imshow("Frame", image)
    key = cv2.waitKey(1) & 0xFF

    # clear the stream in preparation for the next frame
    rawCapture.truncate(0)

    # if the `q` key was pressed, break from the loop
    if key == ord("q"):
        break

# release the video file and close the preview window
out.release()
cv2.destroyAllWindows()
```

```cpp
// Pins used
#define TRIGGER 5
#define ECHO 6
#define BUZZER 9

// Constants
const float sonido = 34300.0;  // Speed of sound in air, in cm/s
const float umbral1 = 175.0;   // Far alert threshold, in cm
const float umbral3 = 50.0;    // Near alert threshold, in cm

void setup() {
  // Start the serial monitor
  Serial.begin(9600);
  // Indicator LED on pin 13
  pinMode(13, OUTPUT);
  // Input/output mode of the sensor and buzzer pins
  pinMode(ECHO, INPUT);
  pinMode(TRIGGER, OUTPUT);
  pinMode(BUZZER, OUTPUT);
}

void loop() {
  // Analog reading from the LDR (light sensor) on pin A0
  if (analogRead(0) > 150) {
    // Reading above the threshold: turn the LED on
    digitalWrite(13, HIGH);
  } else {
    // Otherwise: turn the LED off
    digitalWrite(13, LOW);
  }

  // Prepare the ultrasonic sensor
  iniciarTrigger();

  // Get the distance
  float distancia = calcularDistancia();

  if (distancia < umbral1) {
    alertas(distancia);
  }
}

void alertas(float distancia) {
  // Check the near threshold first; otherwise this branch can never run,
  // because every distance below umbral3 is also below umbral1
  if (distancia <= umbral3) {
    tone(BUZZER, 4000, 75);
  } else if (distancia < umbral1) {
    tone(BUZZER, 2000, 150);
  }
}

float calcularDistancia() {
  // Duration of the echo pulse, in microseconds
  unsigned long tiempo = pulseIn(ECHO, HIGH);

  // Convert to seconds, multiply by the speed of sound, and halve it
  // because the pulse travels to the obstacle and back
  float distancia = tiempo * 0.000001 * sonido / 2.0;
  Serial.print(distancia);
  Serial.print("cm");
  Serial.println();
  delay(250);

  return distancia;
}

void iniciarTrigger() {
  // delayMicroseconds() takes an integer, so the original 0.5 truncated to 0
  digitalWrite(TRIGGER, LOW);
  delayMicroseconds(2);

  // Send a 10 us pulse, the usual requirement for HC-SR04-style sensors
  digitalWrite(TRIGGER, HIGH);
  delayMicroseconds(10);

  digitalWrite(TRIGGER, LOW);
}
```
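The distance arithmetic and alert tiers used by the sketch above can be checked off the device with a few lines of Python. This is an illustrative restatement, not part of the project: the function names and the `"near"`/`"far"` labels are hypothetical, and the tiers are checked nearest first so that both are reachable. The speed of sound is a parameter because 34300 cm/s applies to air; in water sound travels at roughly 1,500 m/s, so a sensor operating underwater would need a different value.

```python
SPEED_OF_SOUND_AIR_CM_PER_S = 34300.0


def echo_to_distance_cm(echo_us, speed_cm_per_s=SPEED_OF_SOUND_AIR_CM_PER_S):
    """Convert an echo pulse duration in microseconds to a one-way distance in cm."""
    # microseconds -> seconds, times speed, halved for the round trip
    return echo_us * 1e-6 * speed_cm_per_s / 2.0


def alert_level(distance_cm, far_cm=175.0, near_cm=50.0):
    """Return "near", "far", or None for a measured distance."""
    if distance_cm <= near_cm:
        return "near"
    if distance_cm < far_cm:
        return "far"
    return None


# A 1000 microsecond echo in air corresponds to about 17.15 cm
print(round(echo_to_distance_cm(1000), 2))
```

With the default thresholds, an obstacle at 100 cm falls in the `"far"` tier and one at 30 cm in the `"near"` tier; anything at 175 cm or beyond produces no alert.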