Planet Captain | From Curious Minds Come Helping Hands

Project Details

Awards & Nominations

Planet Captain has received the following awards and nominations. Way to go!

The Challenge | From Curious Minds Come Helping Hands

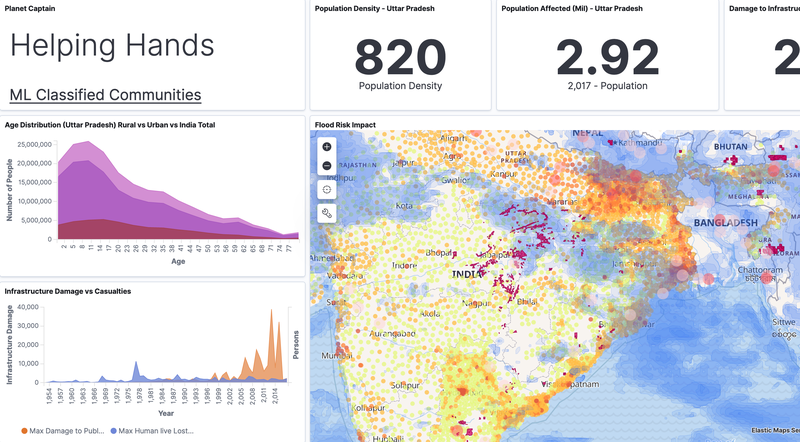

Floodml by Planet Captain

Our solution is a live-monitoring, web-based application that allows humanitarian workers on the ground to make data-driven decisions and help at-risk communities as best they can.

Background

This year 7 million people have been displaced by extreme weather events.

Floods are a global crisis, causing over 40 billion dollars in damage in the last year. The impact of these floods is only worsening with the effects of climate change, with this century seeing a 130% increase in rainfall disruption.

It is becoming more and more important to be able to detect and locate at-risk communities; however, there are many challenges associated with this.

- At-risk communities include those living in temporary housing. These communities often change location with little notice, which makes it difficult to locate them and provide help when required.

- Census data is often inaccurate and outdated. The last census in India was carried out in 2011. There are also an estimated 58 million people who are currently unaccounted for in India.

- Data scale: In today's world we have a saturation of data. It can be challenging to use that data appropriately and effectively to draw valid correlations.

What it does

Our solution is a live-monitoring, web-based application that allows humanitarian workers on the ground to make data-driven decisions and help at-risk communities as best they can. During the hackathon we focused on the state of Uttar Pradesh in India, as it faces many of the challenges addressed by our solution.

Our solution addresses these challenges with the following:

1. We developed an ML dataset of communities in Uttar Pradesh, India, to classify at-risk areas from satellite imagery.

2. We used this dataset to retrain an ML image detection model to classify at-risk communities living in temporary housing.

3. We integrated multi-modal datasets with live satellite imagery in order to relay relevant information about flood-prone areas.

4. We provided relevant statistics, such as demographics, to help aid workers identify at-risk people.

5. We created a user-friendly interface that makes the data intuitive and easy to access.

ML Model Development:

A huge quantity of information can be downlinked from NASA's Earth-observing satellites. Machine learning, in the form of computer vision, was identified as a promising method for discerning the location of communities. A number of object detection libraries were explored; however, a masking algorithm was considered useful for identifying the boundaries, and therefore the size, of each community. The MaskRCNN library was selected based on the group's prior familiarity with it, its use of TensorFlow and its high performance.
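As an illustration of why a mask boundary gives the size of a community, the sketch below applies the shoelace formula to a polygon outline. The function names, the example outline and the `meters_per_pixel` ground resolution are assumptions made for this example and are not taken from the project code.

```python
def polygon_area_px(vertices):
    """Shoelace formula: area of a simple polygon given [(x, y), ...] in pixels."""
    twice_area = 0.0
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def polygon_area_m2(vertices, meters_per_pixel):
    """Scale a pixel area to square meters for a uniform ground resolution."""
    return polygon_area_px(vertices) * meters_per_pixel ** 2


# Hypothetical mask outline: a 40 x 20 pixel rectangle.
outline = [(10, 10), (50, 10), (50, 30), (10, 30)]
area_m2 = polygon_area_m2(outline, meters_per_pixel=2.0)
```

The formula only holds for outlines that do not self-intersect, so a mask with holes or several disjoint parts would need each ring handled separately.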

The ML model was trained on a series of hand-annotated satellite images, labeled with the open-source VGG Image Annotator tool. A total of 25 images were used, split roughly in half between retraining (i.e. augmenting existing weights from a pre-trained object detection model) and validation. We expected this to be too small a sample for real-world use; however, for demonstration purposes the model could be over-trained on the images and used to identify communities within Uttar Pradesh.
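A minimal sketch of how such annotations might be read and split is shown below. It assumes the JSON layout that VGG Image Annotator exports for polygon regions (`filename`, `regions`, `shape_attributes`, `all_points_x`, `all_points_y`); the function names, file names and the fixed random seed are hypothetical.

```python
import random


def load_via_polygons(via_json):
    """Return {filename: [[(x, y), ...], ...]} from a VIA annotation export."""
    polygons = {}
    for entry in via_json.values():
        regions = entry["regions"]
        # VIA 1.x stores regions as a dict, VIA 2.x as a list.
        if isinstance(regions, dict):
            regions = list(regions.values())
        shapes = []
        for region in regions:
            shape = region["shape_attributes"]
            if shape.get("name") != "polygon":
                continue  # skip rectangles, points and other shapes
            shapes.append(list(zip(shape["all_points_x"], shape["all_points_y"])))
        polygons[entry["filename"]] = shapes
    return polygons


def split_train_val(filenames, val_fraction=0.5, seed=0):
    """Shuffle reproducibly, then split into (train, validation) lists."""
    names = sorted(filenames)
    random.Random(seed).shuffle(names)
    n_val = int(len(names) * val_fraction)
    return names[n_val:], names[:n_val]


# Hypothetical single-image export with one triangular region.
sample = {
    "tile_01.png12345": {
        "filename": "tile_01.png",
        "regions": [
            {"shape_attributes": {"name": "polygon",
                                  "all_points_x": [10, 50, 50],
                                  "all_points_y": [10, 10, 30]}}
        ],
    }
}
polygons = load_via_polygons(sample)
train_files, val_files = split_train_val([f"tile_{i:02d}.png" for i in range(25)])
```

With 25 images and a 0.5 validation fraction, this split leaves 13 images for retraining and 12 for validation.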

The masks from the machine learning model were exported as vertices into a JSON file. These were defined in terms of their pixel location, which had to be scaled into meters and then converted into latitude and longitude coordinates. The resulting polygons were then written to GeoJSON files, which could be superimposed onto a map alongside other relevant datasets.
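The conversion described above can be sketched as follows. This is a simplified illustration, not the project's code: it assumes a north-up image whose top-left corner has a known latitude and longitude, a uniform ground resolution, and a local flat-earth approximation of about 111,320 meters per degree of latitude. The function names and example coordinates are hypothetical.

```python
import json
import math

METERS_PER_DEGREE_LAT = 111_320.0  # approximate value, assumed constant


def pixel_to_lonlat(x_px, y_px, origin_lat, origin_lon, meters_per_pixel):
    """Convert a pixel offset from the image's top-left corner to (lon, lat)."""
    east_m = x_px * meters_per_pixel
    south_m = y_px * meters_per_pixel  # pixel y grows downward, i.e. southward
    lat = origin_lat - south_m / METERS_PER_DEGREE_LAT
    meters_per_degree_lon = METERS_PER_DEGREE_LAT * math.cos(math.radians(origin_lat))
    lon = origin_lon + east_m / meters_per_degree_lon
    return lon, lat


def mask_to_feature(vertices_px, origin_lat, origin_lon, meters_per_pixel, properties=None):
    """Build a GeoJSON Polygon feature from mask vertices given in pixels."""
    ring = [list(pixel_to_lonlat(x, y, origin_lat, origin_lon, meters_per_pixel))
            for x, y in vertices_px]
    if ring[0] != ring[-1]:
        ring.append(ring[0])  # GeoJSON linear rings must be closed
    # Note: ring winding order is not normalized in this sketch.
    return {
        "type": "Feature",
        "geometry": {"type": "Polygon", "coordinates": [ring]},
        "properties": properties or {},
    }


# Illustrative origin near Lucknow, Uttar Pradesh, at 10 meters per pixel.
feature = mask_to_feature([(0, 0), (100, 0), (100, 100)], 26.85, 80.95, 10.0,
                          properties={"label": "community"})
collection = {"type": "FeatureCollection", "features": [feature]}
geojson_text = json.dumps(collection)
```

GeoJSON stores each position as longitude first, then latitude, which is why the helper returns `(lon, lat)`. The flat-earth scaling is only reasonable over a single image tile; a larger area would call for a proper map projection.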

NASA Resources

Satellite images were pulled from zoom.earth, which provides access to NASA satellite images updated every day. We used these images to curate the ML training dataset.

NASA Precipitation Measurement Missions (PMM) data was pulled for landslide and rainfall data over India. This was used to provide additional metrics to help the identification of at-risk communities based on current weather systems.

Space Apps Offers

We used Google Cloud Platform credits to host the app.

Future plans

Global Expansion:

- Improve the ML model to detect temporary and rural communities across different cultures, as these are not necessarily consistent. Our current ML model only predicts rural housing, and we wish to expand this to temporary homes.

- Expand to pull global datasets.

- Scale the platform to handle the immense data loads associated with a global rollout (Google Earth Engine).

Other Disaster Events:

- Provide a wider range of additional metrics to support the identification of communities at risk from other natural disasters (e.g. drought, fires, extreme weather systems).

- Incorporate the longer-lasting effects of natural disasters.

Infrastructure:

- Using similar machine learning techniques, we hope to identify communities that depend on infrastructure (roads/rail/pipelines) at risk of being damaged by natural disasters.

- Identifying at-risk infrastructure could allow for preparation to reduce the impact, and could flag the community as less accessible and thus at greater risk.

Coordinating Relief Efforts

- Interface with first responders to help orchestrate relief efforts by letting them specify their definition of a community at risk, as well as the resources available to provide aid.

Built with

Hosted on Google Cloud Platform

Utilizes the ELK stack, built on an Elasticsearch database with Kibana for visualizations.

Uses a MaskRCNN model which has been retrained with a custom curated dataset of remote communities.

Hosts a React app to overlay ML-predicted communities on satellite data using the Google Maps API.

Python scripts pull data from APIs and handle data curation.

For the infrastructure diagram, see the README in the GitHub repo.
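To illustrate how predicted community polygons could reach the Elasticsearch database mentioned above, the sketch below builds a newline-delimited payload in the shape the Elasticsearch bulk API accepts. The index name, the `geometry` field and the example feature are hypothetical, and the field would need a `geo_shape` mapping to be used for map visualizations; the actual ingestion path is documented in the repository, not here.

```python
import json


def build_bulk_payload(features, index_name):
    """Build an NDJSON bulk body: one action line, then one document line."""
    lines = []
    for feature in features:
        lines.append(json.dumps({"index": {"_index": index_name}}))
        document = {"geometry": feature["geometry"], **feature["properties"]}
        lines.append(json.dumps(document))
    return "\n".join(lines) + "\n"  # the bulk body must end with a newline


# Hypothetical GeoJSON feature for one predicted community.
example_feature = {
    "type": "Feature",
    "geometry": {
        "type": "Polygon",
        "coordinates": [[[80.95, 26.85], [80.96, 26.85], [80.96, 26.84], [80.95, 26.85]]],
    },
    "properties": {"label": "community", "score": 0.9},
}
payload = build_bulk_payload([example_feature], index_name="communities")
```

The resulting string would be sent to the `_bulk` endpoint with the `application/x-ndjson` content type.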

TRY IT OUT

Codebase:

Contains

- MaskRCNN ML model + results

- React App

- Python scripts for data scraping and curation

Demo Instance:

TAGS

#computer vision, #machine learning, #react, #node, #javascript, #python, #earth science, #climate change