The Impute Inputters | Chasers of the Lost Data

Project Details

The Challenge | Chasers of the Lost Data

Imputation Inputters

We use computer vision techniques to correct damaged images. We apply similar techniques to stylize images of Earth so that they resemble foreign bodies, helping to mix the new and the familiar.

Code, data, and presentation are in our git repo: https://github.com/craklyn/space-apps-2019

Summary:

Having initially decided to follow the Chasers of the Lost Data challenge, our team was inspired to apply machine learning and computer vision techniques to improving and augmenting images from the NASA Image Library.

Along the way, we found multiple applications for our models. For the first challenge (Chasers of the Lost Data), we degraded 730 images of the surface of Mars by coloring random 5 x 6 pixel regions black. We used these degraded images and their original full-quality counterparts to train a Pix2Pix GAN (Generative Adversarial Network) model. The result is a model that takes as input an image compromised by dust on the lens or by damaged pixel regions, reconstructs the damaged portions, and outputs the improved image.

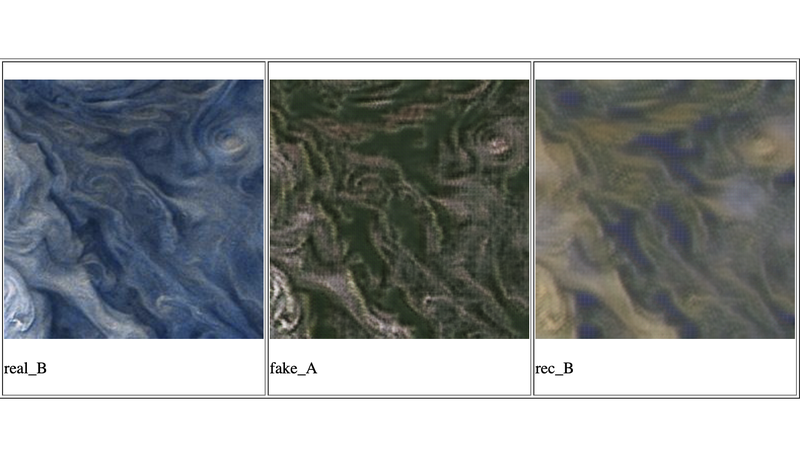
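
The degradation step described above can be sketched roughly as follows. This is a minimal illustration and not the code from our repo: the function name `degrade_image`, the number of patches per image, and the reading of "5 x 6" as 5 rows by 6 columns are all assumptions made for the example.

```python
import numpy as np


def degrade_image(image, num_patches=20, patch_height=5, patch_width=6, rng=None):
    """Return a copy of `image` with random rectangular regions set to black.

    `num_patches` is an assumed value; the write-up does not say how many
    regions were blacked out per image.
    """
    rng = np.random.default_rng() if rng is None else rng
    degraded = image.copy()  # leave the original untouched for the training pair
    height, width = image.shape[:2]
    for _ in range(num_patches):
        # Pick a top-left corner so the whole patch stays inside the image.
        top = rng.integers(0, height - patch_height + 1)
        left = rng.integers(0, width - patch_width + 1)
        degraded[top:top + patch_height, left:left + patch_width] = 0
    return degraded


# Example: build one (degraded, original) training pair from a dummy image.
original = np.full((256, 256, 3), 200, dtype=np.uint8)
pair = (degrade_image(original, rng=np.random.default_rng(0)), original)
```

Keeping the untouched original alongside each degraded copy gives the paired input and target images that a Pix2Pix-style model trains on.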

The next application fell in line with the "Show the World the Data" challenge. We wanted to provide a way to better understand and interact with the surface of other planets using our home planet, Earth. For this project, we scraped tile imagery from Bing Maps and used it, together with imagery of Jupiter's surface, to train a CycleGAN model. The result can be seen on the website we created: we take the in-bounds tile images from the Bing Maps view, send them to our trained CycleGAN model, then replace the Earth tiles with images stylized to look like Jupiter's surface. As you navigate the map, each newly in-bounds tile is sent to our CycleGAN model and the stylized result is added to the view.
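
The tile flow described above might look something like the sketch below. It is only an illustration under stated assumptions, not the website's actual code: the coordinate and quadkey math follows the publicly documented Bing Maps Tile System, while `in_bounds_tiles`, `update_view`, the `stylize` callable (standing in for a call to the trained CycleGAN model), and the use of a cache are hypothetical names and choices introduced here. The view is assumed not to cross the antimeridian.

```python
import math


def lat_lon_to_tile(lat, lon, zoom):
    """Convert a WGS84 coordinate to tile XY at `zoom` (Web Mercator)."""
    lat = max(min(lat, 85.05112878), -85.05112878)
    lon = max(min(lon, 180.0), -180.0)
    x = (lon + 180.0) / 360.0
    sin_lat = math.sin(math.radians(lat))
    y = 0.5 - math.log((1 + sin_lat) / (1 - sin_lat)) / (4 * math.pi)
    n = 2 ** zoom
    return min(max(int(x * n), 0), n - 1), min(max(int(y * n), 0), n - 1)


def tile_to_quadkey(tile_x, tile_y, zoom):
    """Interleave the bits of tile X and Y into a base-4 quadkey string."""
    digits = []
    for i in range(zoom, 0, -1):
        mask = 1 << (i - 1)
        digit = (1 if tile_x & mask else 0) + (2 if tile_y & mask else 0)
        digits.append(str(digit))
    return "".join(digits)


def in_bounds_tiles(north, west, south, east, zoom):
    """List the (x, y) tiles covering the current map view."""
    x0, y0 = lat_lon_to_tile(north, west, zoom)
    x1, y1 = lat_lon_to_tile(south, east, zoom)
    return [(x, y) for y in range(y0, y1 + 1) for x in range(x0, x1 + 1)]


def update_view(bounds, zoom, stylize, cache):
    """Send only newly in-bounds tiles to the (hypothetical) `stylize` callable."""
    for tile_x, tile_y in in_bounds_tiles(*bounds, zoom):
        key = tile_to_quadkey(tile_x, tile_y, zoom)
        if key not in cache:
            cache[key] = stylize(key)  # assumed to return the stylized tile
    return cache
```

Calling `update_view` each time the map moves means tiles that were already stylized are reused from `cache`, and only tiles that have just come into view are passed to the model.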