Uncertainty Hunters | Chasers of the Lost Data

The Challenge | Chasers of the Lost Data

Chaser based on Uncertainty

To fill the empty fields, we rely on the fact that every regression carries an uncertainty. Based on the uncertainties of different regressions across all categories (columns) of the CSV, we built a neural network that recovers the missing information.

Background

CSV files have multiple categories (columns). A category often depends on the others, though sometimes it does not. When two categories are related, we can find a regression that describes both. For example, if the data contains distance x and time t, the fitted regression should be linear (assuming constant velocity), with the velocity as its slope. Regressions are not always linear, however. Furthermore, every regression has an uncertainty.
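As a minimal sketch of the distance and time example, assuming NumPy and made-up samples, a straight-line fit recovers the velocity as the slope. The spread of the residuals is used here as one possible measure of the regression's uncertainty; that choice, like the variable names, is an illustrative assumption and is not taken from the DataChaser source.

```python
import numpy as np

# Hypothetical samples: time in seconds, distance in meters (about 2 m/s plus noise).
t = np.array([0.0, 1.0, 2.0, 3.0, 4.0, 5.0])
x = np.array([0.1, 2.0, 3.9, 6.1, 8.0, 9.9])

# Degree-1 polynomial fit: x is approximated by velocity * t + offset.
velocity, offset = np.polyfit(t, x, 1)

# Assumed uncertainty measure: standard deviation of the fit residuals.
residuals = x - (velocity * t + offset)
uncertainty = residuals.std()

# Estimate a missing distance at t = 6 s together with its limits.
value = velocity * 6.0 + offset
low_limit, up_limit = value - uncertainty, value + uncertainty
print(f"velocity ~ {velocity:.2f} m/s, x(6) ~ {value:.2f} +/- {uncertainty:.2f} m")
```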

With this concept we can develop a model for a neural network in which each neuron receives three inputs:

- Value: The current candidate value for the empty field.

- Low limit: The value minus the uncertainty; it is the lowest number the empty field can take.

- Up limit: The value plus the uncertainty; it is the highest number the empty field can take.
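The three inputs listed above can be derived from a value and its uncertainty. The sketch below is only an illustration: the `Entry` container and the `make_entry` helper are hypothetical names, not part of the DataChaser library.

```python
from typing import NamedTuple


class Entry(NamedTuple):
    """Hypothetical container for the three inputs of a neuron."""
    value: float      # current candidate for the empty field
    low_limit: float  # value - uncertainty
    up_limit: float   # value + uncertainty


def make_entry(value: float, uncertainty: float) -> Entry:
    """Build the (value, low limit, up limit) triple from a value and its uncertainty."""
    if uncertainty < 0:
        raise ValueError("uncertainty must be non-negative")
    return Entry(value, value - uncertainty, value + uncertainty)


entry = make_entry(12.0, 0.5)
print(entry)  # Entry(value=12.0, low_limit=11.5, up_limit=12.5)
```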

Each time a new candidate value and a new uncertainty are added, the model merges them with the current value and uncertainty to obtain a more accurate estimate.

How does it work?

When you have two candidate values and uncertainties from different regressions, there are two possible situations:

There is an intersection between both uncertainties:

The uncertainty shrinks to the range shared by both, and the new candidate value lies midway between the new limits.

There is no intersection between the uncertainties:

The new uncertainty becomes the union of both uncertainties, so it grows.
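The two situations can be sketched as a small merge function over (low limit, up limit) pairs. This is a hedged illustration rather than the library's code: `merge` is a hypothetical name, the "union" of two separate ranges is assumed to be the smallest range that covers both, and the new value in that case is assumed to be its midpoint, which the description above does not specify.

```python
def merge(a, b):
    """Combine two (low, up) ranges into (value, low, up)."""
    a_low, a_up = a
    b_low, b_up = b
    if max(a_low, b_low) <= min(a_up, b_up):
        # Intersection: keep only the shared range, so the uncertainty shrinks.
        low, up = max(a_low, b_low), min(a_up, b_up)
    else:
        # No intersection (assumption): cover both ranges, so the uncertainty grows.
        low, up = min(a_low, b_low), max(a_up, b_up)
    return (low + up) / 2, low, up


overlapping = merge((9.0, 11.0), (10.0, 13.0))
disjoint = merge((9.0, 11.0), (14.0, 16.0))
print(overlapping)  # (10.5, 10.0, 11.0)
print(disjoint)     # (12.5, 9.0, 16.0)
```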

How to improve the algorithm?

Each time the uncertainty shrinks, it moves closer to zero. As the uncertainty decreases, the returned value gets closer to the real lost value; when the uncertainty grows, the value remains lost.

To avoid this problem, keep in the CSV only the categories that you are sure are related. The uncertainty then gets smaller with each merge.

Another way to improve the algorithm is to add more regression models or, if you know how the categories are related, to choose only the matching regression.
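To illustrate how different regression models lead to different uncertainties, the sketch below fits linear, quadratic, and cubic polynomials to the same made-up data with NumPy. The data, the names, and the use of the residual standard deviation as the uncertainty are assumptions for illustration only.

```python
import numpy as np

# Hypothetical data following a quadratic trend with small deviations.
t = np.arange(8, dtype=float)
y = 0.5 * t**2 + np.array([0.1, -0.1, 0.05, -0.05, 0.1, -0.1, 0.05, -0.05])

candidates = {"linear": 1, "quadratic": 2, "cubic": 3}
uncertainties = {}
for name, degree in candidates.items():
    coeffs = np.polyfit(t, y, degree)       # least-squares polynomial fit
    residuals = y - np.polyval(coeffs, t)   # distance of each point from the curve
    uncertainties[name] = residuals.std()   # assumed uncertainty measure

for name, u in uncertainties.items():
    print(f"{name}: uncertainty ~ {u:.3f}")
```

A higher-degree fit never has larger residuals on the data it was fitted to, so a fair comparison between models would be made on rows held out from the fit.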

Resources

- Pandas

- sklearn

- csv

- PyPI

- GitHub

- NumPy

Documentation:

Repository Link: https://github.com/suulcoder/Chasers-of-the-Lost-Data

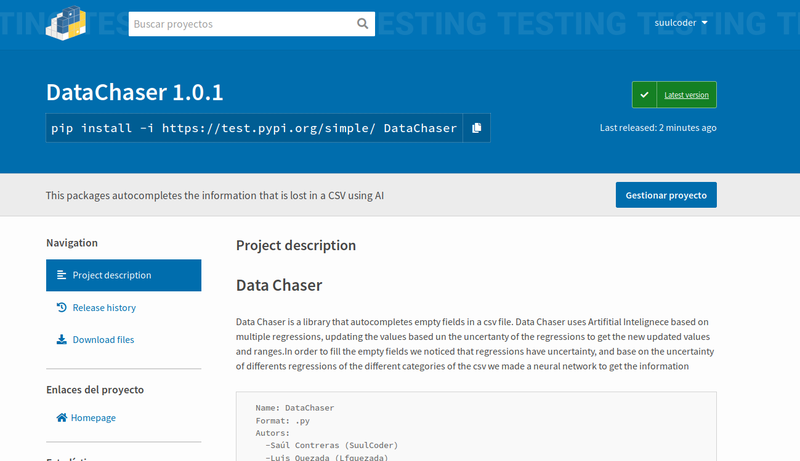

Library link: https://test.pypi.org/project/DataChaser/

Prerequisites:

    pip3 install pandas
    pip3 install numpy
    pip3 install --user -i https://test.pypi.org/simple/ DataChaser

How to Use?

You must import the library:

    from DataChaser import *

To start using DataChaser, instantiate the class after importing the library:

    DataChaser = Chaser({Path of input File}, {Path of output File})

Then run the following methods of the class:

    # You must run these methods for the chaser to work
    DataChaser.trainDataBuilder()
    DataChaser.getDataToChase()

    # The regressions start here; you can delete
    # those that do not work with your data
    DataChaser.LinearRegression()
    DataChaser.cubicRegression()
    DataChaser.quadraticRegression()

    # This method writes a new document to the output file
    DataChaser.store()

Faced Challenges:

The first challenge this library faces is that these methods do not work without a large amount of data. To make them work, you need thousands or millions of records.